Running Phi-3 on Windows

Phi-3 Mini: A Guide to Local AI with Ollama

In the ever-evolving landscape of artificial intelligence, Microsoft's Phi-3 Mini stands out as a lightweight yet capable small language model (SLM). At 3.8 billion parameters, it is small enough to run on a typical PC while still handling a wide range of AI tasks. In this guide, we'll explore how to run Phi-3 Mini locally using Ollama, a simple desktop application for running models on your own machine.

Installation

Ollama is a fantastic tool for running AI models locally: it is a desktop application that runs models on your own machine. Download the application from the Ollama website.

I run Windows 11, so I downloaded the Windows version (at the time of writing, it was still in preview).

Run through the simple installation steps and you are ready to go.

Running Ollama

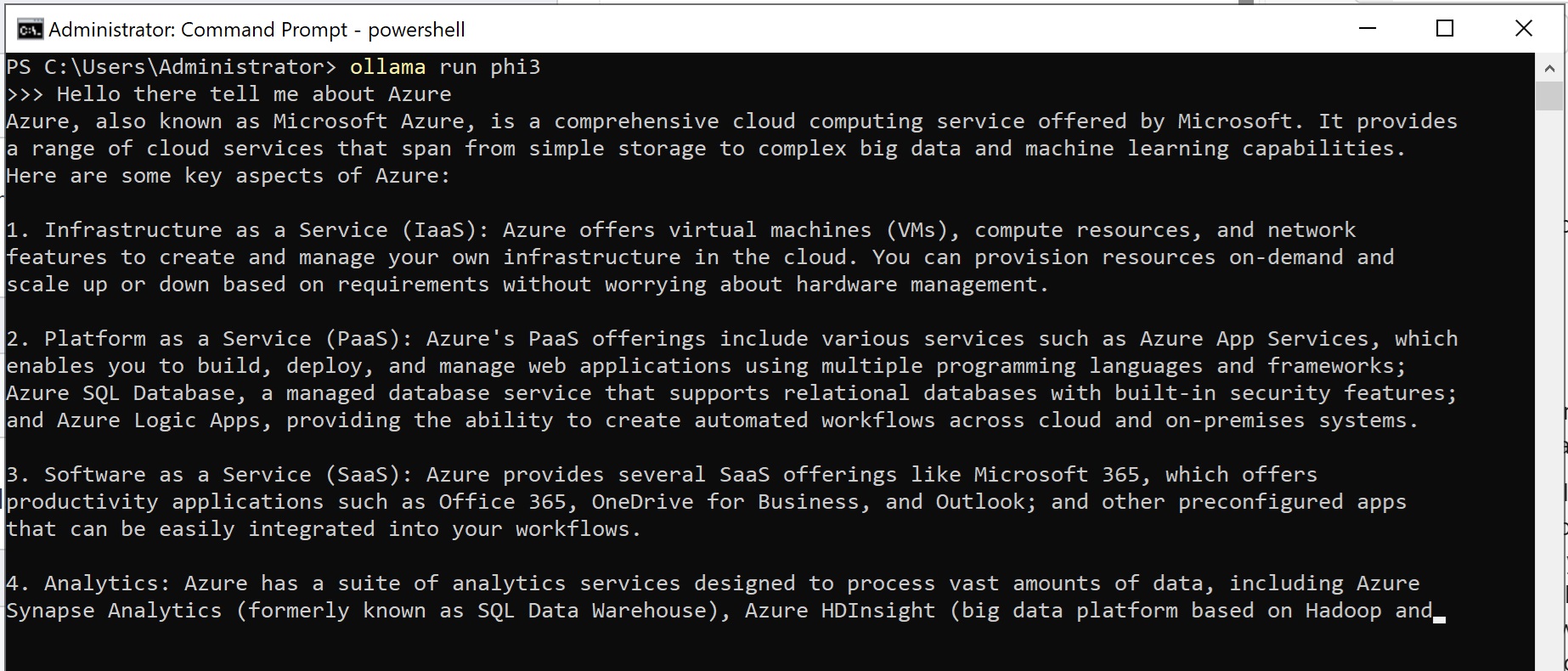

After installation, Ollama runs in the background. If it is not running, you can start it from a terminal session:

ollama serve
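If you are not sure whether the server is up, you can check it from a script. The sketch below assumes Ollama is listening on its default local address, http://127.0.0.1:11434 (adjust it if you changed OLLAMA_HOST); `is_ollama_running` is a hypothetical helper name, and it only checks that something answers HTTP on that address.

```python
import urllib.request

# Assumption: Ollama's default local address. Change it if you configured another host/port.
OLLAMA_URL = "http://127.0.0.1:11434"


def is_ollama_running(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers at base_url with status 200, else False."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            # The Ollama root endpoint replies with a short plain-text status message.
            return resp.status == 200
    except OSError:
        # URLError, HTTPError and socket timeouts are all subclasses of OSError:
        # connection refused, timed out, or an error status means "not usable".
        return False


if __name__ == "__main__":
    if is_ollama_running():
        print("Ollama is reachable")
    else:
        print("Ollama is not reachable; try running: ollama serve")
```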

Download the Phi-3 Model

ollama pull phi3
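To confirm the download, `ollama list` shows the models on your machine. The same information is available from the server's /api/tags endpoint; the sketch below assumes the default address and that each entry carries a `name` such as `phi3:latest`. The helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://127.0.0.1:11434"  # assumed default local address


def fetch_tags(base_url: str = OLLAMA_URL) -> dict:
    """GET /api/tags, which lists the models already pulled to this machine."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return json.load(resp)


def model_names(tags: dict) -> list:
    """Extract model names such as 'phi3:latest' from an /api/tags response."""
    return [m["name"] for m in tags.get("models", [])]


def has_model(tags: dict, model: str) -> bool:
    """True if `model` matches a pulled name exactly, or matches it without the ':tag' suffix."""
    for name in model_names(tags):
        if name == model or name.split(":", 1)[0] == model:
            return True
    return False


if __name__ == "__main__":
    try:
        tags = fetch_tags()
    except OSError as err:
        print(f"Could not reach Ollama: {err}")
    else:
        print("phi3 pulled:", has_model(tags, "phi3"))
```

Matching on the part before the colon lets `has_model(tags, "phi3")` succeed whichever tag was pulled, while a fully qualified name like `phi3:latest` still requires an exact match.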

Start the Phi-3 Model

ollama run phi3

And that's it: you are up and running with the Phi-3 model on your local machine. ollama run opens an interactive chat session in the terminal; type /bye to exit.
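Besides the interactive terminal session, the running server accepts prompts over HTTP, which is handy for scripting. This is a minimal sketch assuming the default address and Ollama's /api/generate endpoint; setting `"stream": False` asks for a single JSON reply whose generated text is in the `response` field. `build_generate_request` and `generate` are hypothetical helper names.

```python
import json
import urllib.request

OLLAMA_URL = "http://127.0.0.1:11434"  # assumed default local address


def build_generate_request(prompt: str, model: str = "phi3",
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a POST to /api/generate; stream=False requests one JSON reply instead of a stream."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "phi3", timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text from the 'response' field."""
    req = build_generate_request(prompt, model)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)["response"]


if __name__ == "__main__":
    try:
        print(generate("Explain in one sentence what a small language model is."))
    except OSError as err:
        print(f"Request failed; is 'ollama serve' running? ({err})")
```

The generous timeout is deliberate: the first request after startup may take a while because the model has to be loaded into memory.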

Running a Web Chat Interface

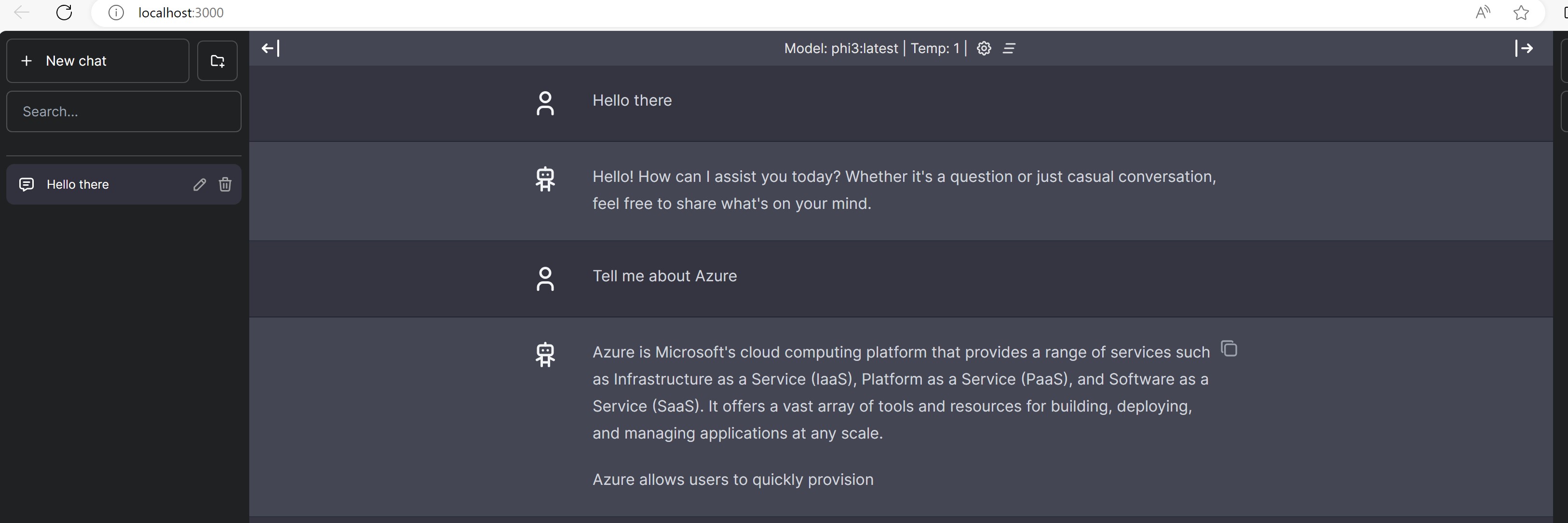

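chatbot-ollama provides a web-based chat interface similar to ChatGPT. To run it with Docker (for example Docker Desktop on Windows), use the following command:

docker run -p 3000:3000 ghcr.io/ivanfioravanti/chatbot-ollama:main

The -p 3000:3000 option publishes the container's port 3000 on your machine, so the interface is available at http://localhost:3000 in your browser.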
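A ChatGPT-style interface differs from a single prompt in that it keeps the conversation history and sends it with every new question. The sketch below illustrates that idea against Ollama's /api/chat endpoint, assuming the default address and a non-streaming reply whose assistant turn is in the `message` field. It is not necessarily how chatbot-ollama works internally, and `chat_payload` and `send_chat` are hypothetical names.

```python
import json
import urllib.request

OLLAMA_URL = "http://127.0.0.1:11434"  # assumed default local address


def chat_payload(history: list, model: str = "phi3") -> dict:
    """Body for /api/chat: the whole conversation so far, oldest message first."""
    return {"model": model, "messages": history, "stream": False}


def send_chat(history: list, model: str = "phi3",
              base_url: str = OLLAMA_URL, timeout: float = 120.0) -> dict:
    """POST the conversation and return the assistant's reply as a {'role', 'content'} dict."""
    req = urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(chat_payload(history, model)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        # Non-streaming replies carry the assistant turn in the "message" field.
        return json.load(resp)["message"]


if __name__ == "__main__":
    history = [{"role": "user", "content": "Name one use for a local language model."}]
    try:
        reply = send_chat(history)
        history.append(reply)  # keep the assistant turn so the follow-up has context
        history.append({"role": "user", "content": "Give one more."})
        print(send_chat(history)["content"])
    except OSError as err:
        print(f"Request failed: {err}")
```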

© 2026 - The Azure Shack - AI generated content throughout testing CoPilot Chat, OpenAI on Azure and Microsoft Designer