RagApp

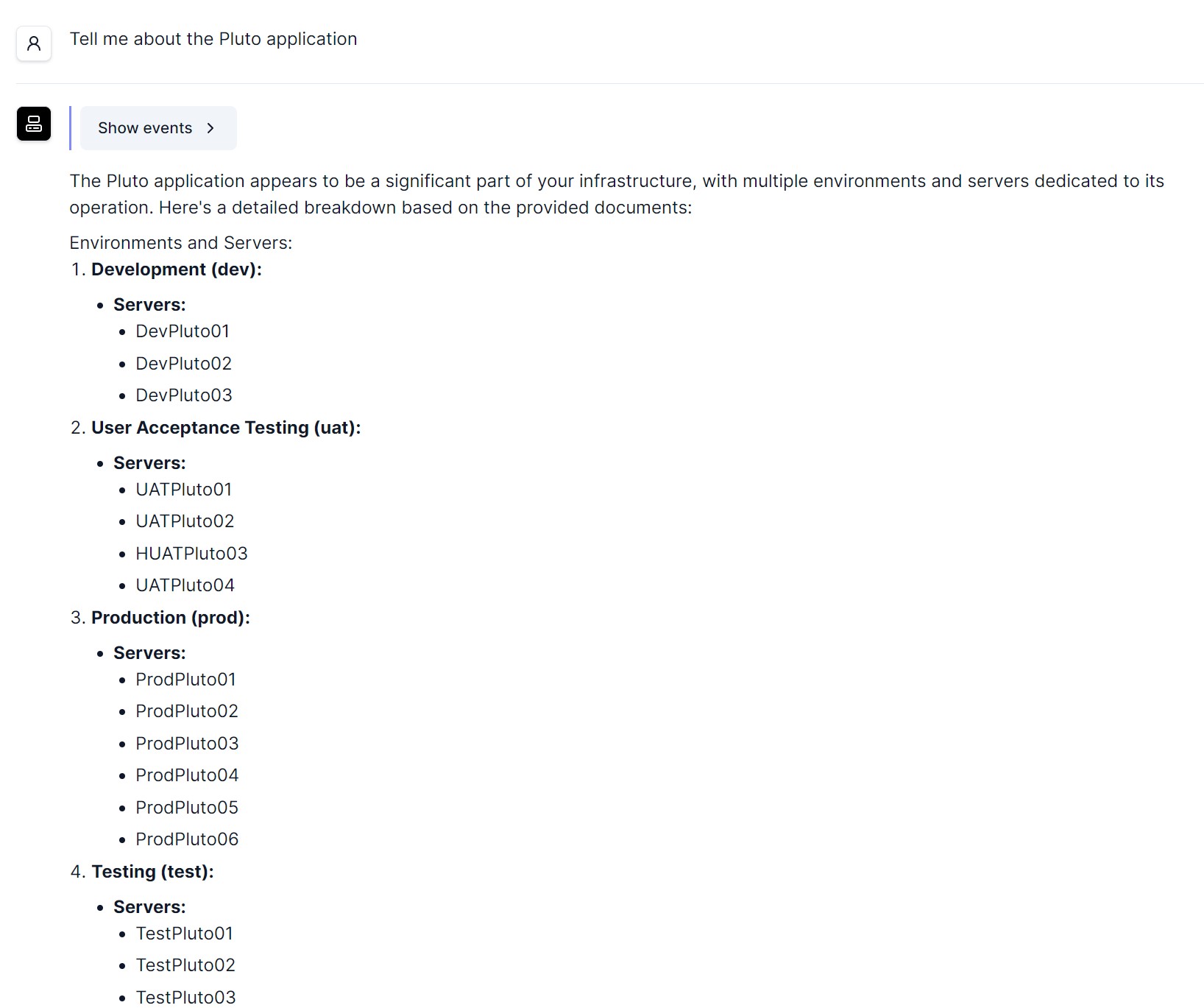

I have been playing with LLMs for about a year now, mostly using them to chat with documents. I have tried various accelerators, but I always wanted a simple interface for both general LLM conversations and chatting with my own documents. I was recommended a great app called RagApp. It provides a simple, intuitive interface for interacting with LLMs and makes it easy to experiment with LLMs and small language models (SLMs), including models running locally on Ollama.

In my example below I will be using a simple RAG (retrieval-augmented generation) application with data loaded from Azure Migrate. I am testing whether combining Azure Migrate and LLMs can streamline the application discovery process. The source code for the application and the Docker image can be found on the RagApp GitHub page: RagApp

Installation

- I am simply running the application as a Docker container.

- Once Docker is installed on your machine, you can run the following command to start the application (it maps port 8000 on your machine to port 8000 in the container):

- `docker run -p 8000:8000 ragapp/ragapp`

URLs

- Admin: http://localhost:8000/admin/

- Chat UI: http://localhost:8000

- API docs: http://localhost:8000/docs
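If you want to script a quick check that the container is up, the sketch below builds the three URLs from a base address and tests whether each one responds. It is a minimal sketch using only the Python standard library; the default base URL assumes the `-p 8000:8000` port mapping from the install step, and the helper names `ragapp_urls` and `check_ragapp` are hypothetical, not part of RagApp.

```python
from urllib.request import urlopen

# Paths as listed above; the base URL assumes the default "-p 8000:8000" mapping.
RAGAPP_PATHS = {
    "admin": "/admin/",
    "chat_ui": "/",
    "api_docs": "/docs",
}


def ragapp_urls(base_url: str = "http://localhost:8000") -> dict[str, str]:
    """Build the RagApp URLs for a given base URL (a trailing slash is tolerated)."""
    base = base_url.rstrip("/")
    return {name: base + path for name, path in RAGAPP_PATHS.items()}


def check_ragapp(base_url: str = "http://localhost:8000", timeout: float = 5.0) -> dict[str, bool]:
    """Return True for each URL that answers with an HTTP 2xx status, else False."""
    results = {}
    for name, url in ragapp_urls(base_url).items():
        try:
            # urlopen follows redirects and raises HTTPError (an OSError) on 4xx/5xx.
            with urlopen(url, timeout=timeout) as response:
                results[name] = 200 <= response.status < 300
        except OSError:
            # Covers connection refused, timeouts, DNS failures and HTTP errors.
            results[name] = False
    return results
```

Once the container has finished starting, calling `check_ragapp()` should return `True` for each entry; a `False` usually means the container is still starting or the port mapping differs.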

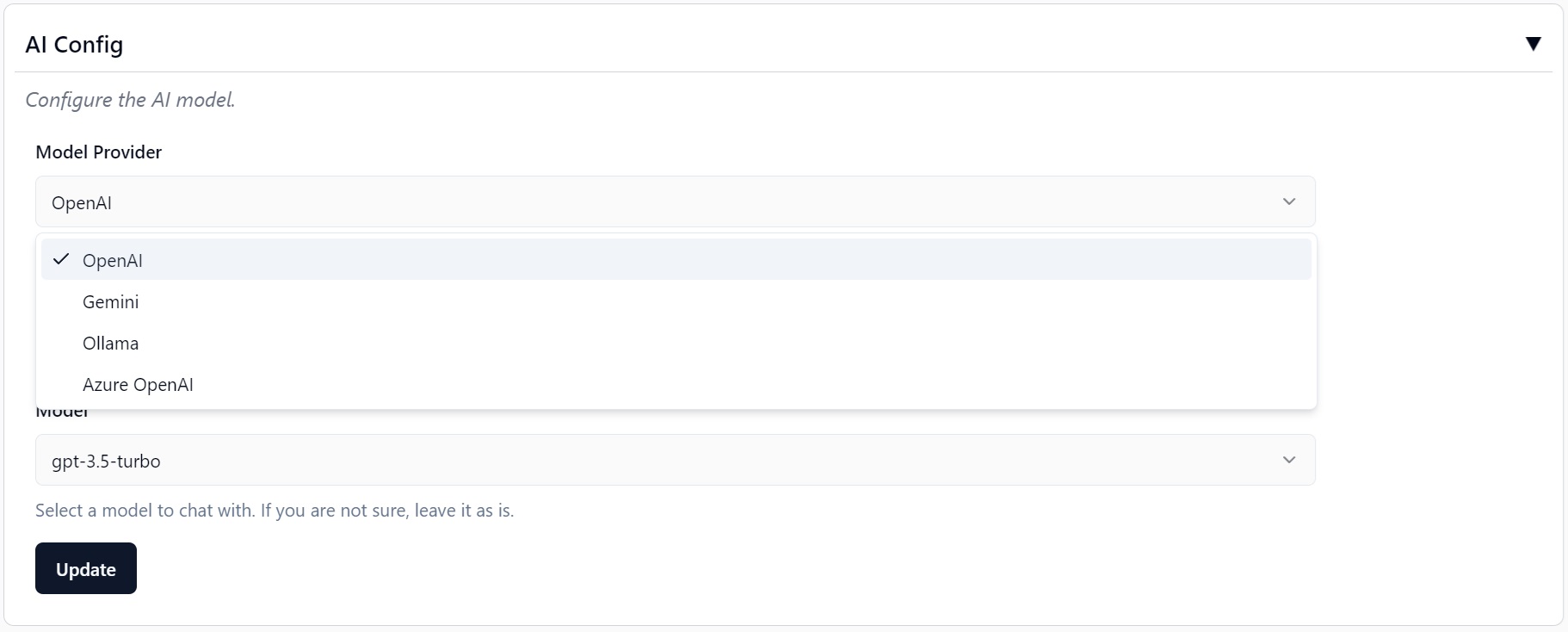

RagApp supports a wide variety of LLM providers. I am currently using Azure OpenAI with GPT-4o as the chat model and text-embedding-3-large as the embedding model.

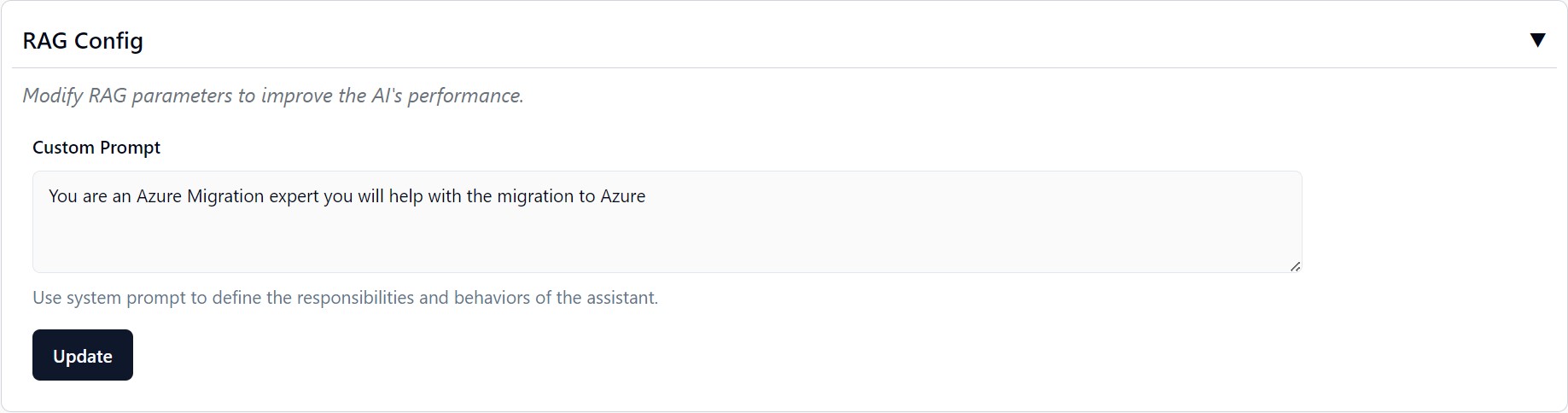

System prompt: use the system prompt to define the responsibilities and behaviour of the assistant.
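Chat models typically receive the system prompt as the first message of every request, ahead of the conversation history. The sketch below shows that common OpenAI-style `role`/`content` message layout in Python. It illustrates the general pattern rather than RagApp's own code, and both the prompt text and the `build_messages` helper are hypothetical.

```python
# Hypothetical system prompt for the Azure Migrate discovery scenario.
SYSTEM_PROMPT = (
    "You are an assistant that helps with application discovery for an Azure migration. "
    "Answer only from the provided Azure Migrate and CMDB data, and say so when the data "
    "does not contain the answer."
)


def build_messages(
    system_prompt: str, history: list[dict[str, str]], user_question: str
) -> list[dict[str, str]]:
    """Place the system prompt first, then earlier turns, then the new user question."""
    if not system_prompt.strip():
        raise ValueError("system prompt must not be empty")
    return [
        {"role": "system", "content": system_prompt},
        *history,
        {"role": "user", "content": user_question},
    ]
```

Because the system message is resent with every request, changing it in the admin UI changes the assistant's behaviour for all subsequent questions without retraining anything.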

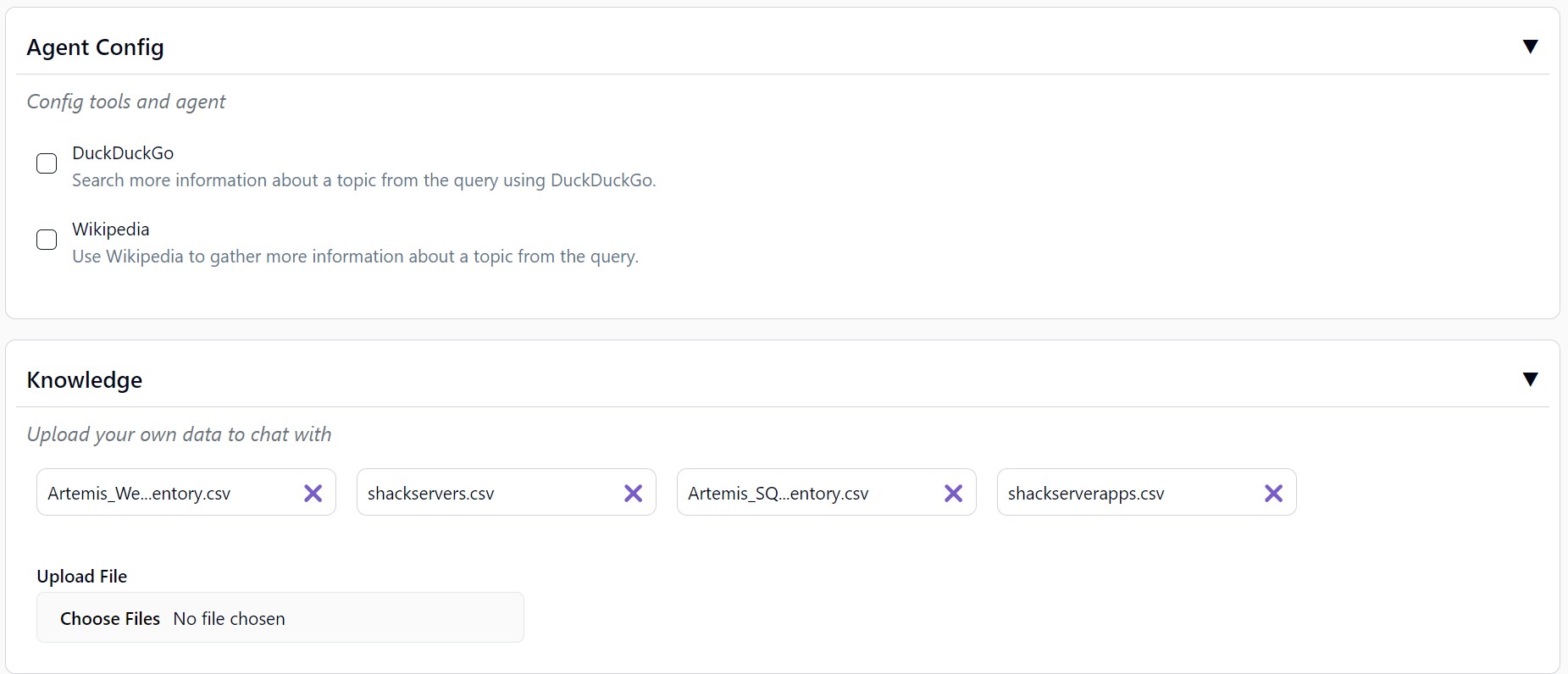

In this example I have loaded test data from Azure Migrate and simulated data from a CMDB (configuration management database).
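RAG tends to work best when structured exports are turned into short, self-describing text. The sketch below joins a hypothetical Azure Migrate server export with a hypothetical CMDB extract on server name and renders one plain-text document per server, which could then be saved and uploaded to RagApp. The column names, sample rows and helper functions are invented for illustration; real Azure Migrate exports and CMDB schemas will differ.

```python
import csv
import io

# Hypothetical CSV extracts: real Azure Migrate exports and CMDB schemas use other columns.
MIGRATE_CSV = """server_name,os,cores,memory_gb
web01,Windows Server 2016,4,16
sql01,Windows Server 2019,8,64
"""

CMDB_CSV = """server_name,application,owner
web01,Intranet Portal,Team A
sql01,Intranet Portal,Team B
"""


def load_rows(text: str) -> dict[str, dict[str, str]]:
    """Parse CSV text into a dict keyed by server name."""
    return {row["server_name"]: row for row in csv.DictReader(io.StringIO(text))}


def to_documents(migrate_csv: str, cmdb_csv: str) -> list[str]:
    """Join both sources on server name and render one plain-text document per server."""
    migrate = load_rows(migrate_csv)
    cmdb = load_rows(cmdb_csv)
    docs = []
    for name, server in sorted(migrate.items()):
        app = cmdb.get(name, {})  # servers missing from the CMDB get "unknown" values
        docs.append(
            f"Server {name} runs {server['os']} with {server['cores']} cores and "
            f"{server['memory_gb']} GB RAM. "
            f"Application: {app.get('application', 'unknown')}. "
            f"Owner: {app.get('owner', 'unknown')}."
        )
    return docs
```

Writing each server as a complete sentence keeps every retrieved chunk meaningful on its own, so the model does not need to see column headers to interpret a row.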

A very quickly thrown-together RAG-based application.
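For readers new to the pattern: a RAG application embeds the question, retrieves the most similar chunks of the loaded documents, and passes them to the chat model as context. The sketch below shows that retrieval step with cosine similarity over tiny, made-up 3-dimensional vectors. In RagApp the real vectors would come from the configured embedding model, and its actual retrieval pipeline may differ; all names and data here are hypothetical.

```python
import math

# Toy chunks with made-up 3-d "embeddings"; real embeddings have many more dimensions.
CHUNKS = [
    ("sql01 hosts the Intranet Portal database.", [0.9, 0.1, 0.0]),
    ("web01 hosts the Intranet Portal front end.", [0.7, 0.6, 0.0]),
    ("Backups run nightly at 02:00.", [0.0, 0.1, 0.9]),
]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: list[float], chunks: list[tuple[str, list[float]]], k: int = 2) -> list[str]:
    """Return the texts of the k chunks most similar to the query vector."""
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, c[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, context: list[str]) -> str:
    """Augment the question with the retrieved context before sending it to the chat model."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Use only this context:\n{joined}\n\nQuestion: {question}"
```

With a query vector such as `[1.0, 0.2, 0.0]`, `retrieve` ranks the two Intranet Portal chunks above the backup note, so only the relevant server facts are placed in the prompt.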

© 2026 - The Azure Shack - AI generated content throughout testing CoPilot Chat, OpenAI on Azure and Microsoft Designer